Appendix 2: Use Case Example

A grocery store company has ten store locations across Florida. This parent company wants to accurately forecast daily sales for 100 different products such as bread, hamburgers, and soda at each location. Provided with daily historical sales over the last three years, SensibleAI Forecast generates hundreds to thousands of performance-enhancing features to train and select the most accurate forecasting model possible to optimize downstream business processes.

Common Definitions

Time Series Forecasting: Time series forecasting uses a model to predict future values based on previously observed values. A simple example of this is predicting future grocery store hamburger sales based on historical sales over time.

Other features (see below) could be incorporated to improve the predictive capability of a model.

SensibleAI Forecast Project: A SensibleAI Forecast project is a collection of targets, data sources, and model configurations.

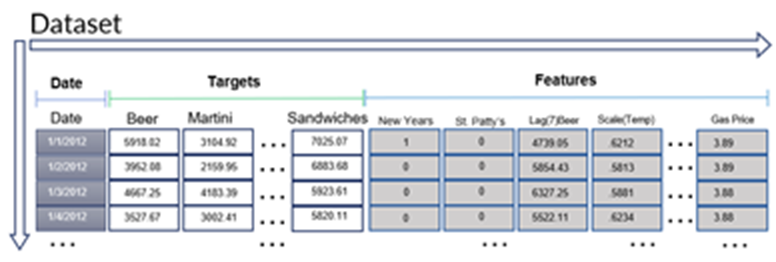

Targets and Features: A target is a subject that is to be forecasted and is represented by a single series of historical data (such as beer, martini, and sandwich sales).

A feature is a measurable characteristic of a phenomenon, represented as a data series, that can be leveraged to improve the predictive accuracy of a model. Multivariate models can learn complex non-linear relationships between a target variable and multiple feature variables.

In this example, machine learning and statistical models produce forecasts for each target, such as beer, martini, and sandwich sales. The models that produce the forecasts can use feature variables such as New Year's or Gas Price to enhance forecast accuracy.

Model Training: In a machine learning context, model training is the process of providing machine learning algorithms with data from which they learn trends and patterns.

Iterations (Machine Learning): In machine learning, iterations are the number of times an algorithm's parameters are updated. Iterations are done to achieve optimal algorithm performance.

Hyperparameters:

- Cannot be learned from the data.
- Are tunable (hyperparameter tuning). Different hyperparameter tunings result in different model parameter optimizations. This leads to different levels of accuracy.
- Directly control the behavior of the training algorithm.
- Have significant impact on the performance of the model being trained.
- Express high-level structural settings for algorithms.
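The properties above can be sketched with a small, hypothetical example. Here the `window` of a moving-average forecaster plays the role of a hyperparameter: it is set before any forecast is made, it is not learned from the data, and different values produce different errors. The sales data, function names, and candidate values are illustrative assumptions and do not reflect how SensibleAI Forecast tunes its models.

```python
def moving_average_forecast(history, window):
    """Forecast the next value as the mean of the last `window` observations."""
    recent = history[-window:]
    return sum(recent) / len(recent)


def holdout_mae(series, window, n_holdout):
    """Mean absolute error of one-step-ahead forecasts over the last n_holdout points."""
    errors = []
    for i in range(len(series) - n_holdout, len(series)):
        prediction = moving_average_forecast(series[:i], window)
        errors.append(abs(series[i] - prediction))
    return sum(errors) / len(errors)


# Hypothetical daily unit sales with a weekly pattern (illustrative data only).
sales = [20, 22, 21, 25, 30, 42, 38] * 6

# `window` is the hyperparameter: chosen up front, then tuned by comparing errors.
candidate_windows = [1, 3, 7, 14]
scores = {w: holdout_mae(sales, w, n_holdout=14) for w in candidate_windows}
best_window = min(scores, key=scores.get)
```

Each entry in `scores` is the holdout error for one hyperparameter setting, and `best_window` is the setting with the lowest error.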

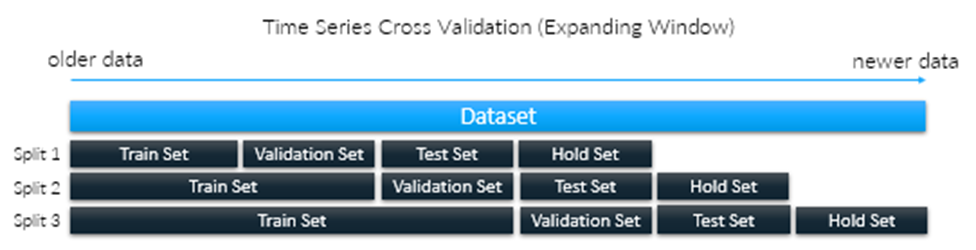

Cross-Validation: A technique that tests statistical or machine learning model performance prior to putting the model into production. Cross-validation works by reserving specific data set samples on which the model is not trained. The model then makes predictions on these held-out samples, and the predictions are compared with the actual values to evaluate the model's accuracy.

Cross-validation is used to:

- Choose the best hyperparameters (the best variation) of a model.
- Compare different models to determine the best model to deploy.

Cross-validation for a time series uses methods such as walk-forward cross-validation (or sliding-window) and expanding-window cross-validation (or forward-chaining). Both methods keep every test sample later in time than the samples used for training. The following images illustrate these methods.
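As a rough sketch of how the two methods differ, the following example generates train and test index ranges for each one. The function names and the sizes used are assumptions chosen for illustration; the source does not describe how SensibleAI Forecast builds its splits.

```python
def sliding_window_splits(n_samples, train_size, test_size):
    """Sliding-window splits: a fixed-length training window moves forward in time."""
    splits = []
    start = 0
    while start + train_size + test_size <= n_samples:
        train = list(range(start, start + train_size))
        test = list(range(start + train_size, start + train_size + test_size))
        splits.append((train, test))
        start += test_size
    return splits


def expanding_window_splits(n_samples, initial_train_size, test_size):
    """Expanding-window splits: training always starts at index 0 and grows each fold."""
    splits = []
    train_end = initial_train_size
    while train_end + test_size <= n_samples:
        train = list(range(0, train_end))
        test = list(range(train_end, train_end + test_size))
        splits.append((train, test))
        train_end += test_size
    return splits


sliding = sliding_window_splits(n_samples=10, train_size=4, test_size=2)
expanding = expanding_window_splits(n_samples=10, initial_train_size=4, test_size=2)

for train, test in sliding:
    print("sliding  ", train, "->", test)
for train, test in expanding:
    print("expanding", train, "->", test)
```

In both cases the test indices always follow the training indices, so the model is never evaluated on data that precedes what it was trained on.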

Meta-Learning: Machine learning algorithms that learn from the output of other machine learning algorithms. Rather than increasing the performance of a single model, several complementary models can be combined to increase model performance.

Ensembles: A meta-learning approach that uses the principle of creating a varied team of experts. Ensemble methods are based on the idea that, by combining multiple weaker learners, a stronger learner is created.

Boosting: An ensemble technique that sequentially boosts the performance of weak learners to construct a stronger algorithmic ensemble as a linear combination of simple weak algorithms. Each weak learner in the sequence tries to improve or correct mistakes made by the previous learner. At each iteration of the Boosting process:

- Re-sampled data sets are constructed specifically to generate complementary learners.
- Each learner's vote is weighted based on its past performance and errors.

Some of the most popular boosting techniques include:

- AdaBoost (Adaptive Boosting)
- Gradient Boosting
- XGBoost (Extreme Gradient Boosting)
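To make the sequential idea concrete, here is a minimal sketch of gradient boosting for squared error, using one-split regression stumps as the weak learners. Each stump is fit to the residuals left by the learners before it, and the final prediction is a linear combination of all stumps. This is a simplified illustration, not AdaBoost's re-sampling and vote weighting, and not the implementation used by any particular library or by SensibleAI Forecast. The data and all names are hypothetical.

```python
def fit_stump(xs, residuals):
    """Fit a one-split regression stump that minimizes squared error on the residuals."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # skip the max so the right side is never empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = sum((r - left_mean) ** 2 for r in left)
        sse += sum((r - right_mean) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def fit_boosted_model(xs, ys, n_rounds=20, learning_rate=0.5):
    """Start from the mean, then repeatedly fit a stump to the current residuals."""
    base = sum(ys) / len(ys)
    predictions = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * predict_stump(stump, x)
                       for p, x in zip(predictions, xs)]
    return {"base": base, "learning_rate": learning_rate, "stumps": stumps}


def predict(model, x):
    """Sum the base value and the scaled contribution of every stump."""
    total = model["base"]
    for stump in model["stumps"]:
        total += model["learning_rate"] * predict_stump(stump, x)
    return total


def mean_squared_error(actual, predicted):
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical data: day index versus unit sales.
days = list(range(10))
sales = [12, 14, 13, 15, 30, 32, 31, 33, 20, 21]

model = fit_boosted_model(days, sales)
base_mse = mean_squared_error(sales, [model["base"]] * len(sales))
boosted_mse = mean_squared_error(sales, [predict(model, d) for d in days])
```

Comparing `base_mse` with `boosted_mse` shows how much the sequence of weak learners improves on a constant prediction for the training data.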

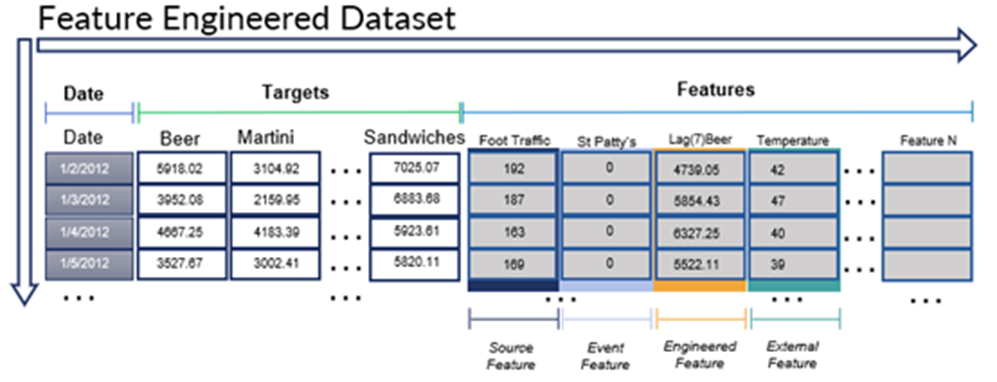

Feature Types

There are four main feature types recognized by SensibleAI Forecast. Feature types are largely categorized by how they are created or gathered.

Source Feature: A data column in the source data set that enters SensibleAI Forecast. Specify source features in the Data Features page of SensibleAI Forecast.

You can create additional features if you have already collected features outside of SensibleAI Forecast and you know these features help the modeling process. However, creating additional features is not necessary and is not heavily used, because much of SensibleAI Forecast is geared toward generating external features, event features, and engineered features for you. Source features are largely considered a data science function of SensibleAI Forecast.

External Feature: A data column that is grafted onto the source data and not originally included in the source data. External features are largely found through external data APIs such as weather or macro-economic data. The most common way to graft an external feature onto the source data is by leveraging the Location capability in SensibleAI Forecast, which fetches features such as weather data.

Currently, only weather external features are supported, and they are limited to a forecast range of less than 15 days.

Event Feature: A special subset of an external feature generated using the Event Builder in SensibleAI Forecast. An event feature is always a binary categorical data column generated from the events included in the SensibleAI Forecast Model Build phase.

Event features often have a strong positive effect on model performance, because they flag the dates on which an event such as a holiday occurs.
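A binary event column can be pictured with the short sketch below, which flags New Year's Day across a range of dates. The function name and the dates are illustrative assumptions. In SensibleAI Forecast, event features are generated through the Event Builder rather than written by hand.

```python
from datetime import date, timedelta


def build_event_feature(dates, event_dates):
    """Return 1 for each date on which the event occurs and 0 otherwise."""
    event_set = set(event_dates)
    return [1 if d in event_set else 0 for d in dates]


# Five consecutive days spanning the turn of the year.
start = date(2023, 12, 30)
dates = [start + timedelta(days=i) for i in range(5)]

new_years_day = [date(2024, 1, 1)]
new_years_flag = build_event_feature(dates, new_years_day)
print(new_years_flag)  # [0, 0, 1, 0, 0]
```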

Engineered Feature: A data column built by augmenting and transforming existing columns in the source data as well as other previously engineered features. Examples of this transformation process include cleaning missing values, lagging, moving averages, time breakdowns, and scaling values in an existing feature to create new engineered features.

Typically, a large part of a data scientist's time goes toward manufacturing engineered features to improve overall model performance. SensibleAI Forecast creates engineered features by autonomously running statistical column transformations against each column in the source data set. This genetic-algorithm-style creation of engineered features produces thousands of unique engineered features that SensibleAI Forecast can choose from and leverage in the modeling process.
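The transformations named above (cleaning missing values, lagging, moving averages, time breakdowns, and scaling) can be sketched in a few lines. The helper names and sample data below are hypothetical, and the exact transformations SensibleAI Forecast applies are not described in this appendix.

```python
from datetime import date, timedelta


def forward_fill(values):
    """Replace None with the most recent observed value."""
    filled, last = [], None
    for v in values:
        if v is not None:
            last = v
        filled.append(last)
    return filled


def lag(values, k):
    """Shift the series by k steps (k >= 1); the first k rows have no history."""
    return [None] * k + values[:len(values) - k]


def moving_average(values, window):
    """Mean of the previous `window` values, excluding the current row."""
    out = []
    for i in range(len(values)):
        if i < window:
            out.append(None)
        else:
            out.append(sum(values[i - window:i]) / window)
    return out


def min_max_scale(values):
    """Rescale values to the range [0, 1]; a constant series maps to all zeros."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]


# Hypothetical daily sales with one missing value, starting on a Monday.
dates = [date(2024, 1, 1) + timedelta(days=i) for i in range(6)]
raw_sales = [10, None, 14, 12, 16, 18]

sales = forward_fill(raw_sales)         # cleaning missing values
sales_lag_1 = lag(sales, 1)             # lagging
sales_ma_3 = moving_average(sales, 3)   # moving average
day_of_week = [d.weekday() for d in dates]  # time breakdown (Monday = 0)
sales_scaled = min_max_scale(sales)     # scaling
```

Each resulting list is a new column that a multivariate model could use alongside the original series.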

Model Types

Univariate (statistical) model: A model that does not accept external variables to make predictions. Univariate models are used when there are not many data patterns to learn from, such as with one or two seasons.

Multivariate (machine learning) model: A model that leverages external variables in its predictions. These models are used when there are many data patterns to learn from, such as multiple seasons, anomalies, and a trend.

Multi-series model: A type of multivariate model that is trained to make predictions on a group of targets. A multi-series model requires data to be pivoted in a special long-form data format.
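As an illustration of what a long-form layout generally looks like, the sketch below reshapes a wide table (one column per target) into one row per date and target. The column names `target` and `value` are assumptions made for this example. The exact format SensibleAI Forecast requires is not specified in this appendix.

```python
# Wide format: one row per date, one column per target (illustrative data).
wide_rows = [
    {"date": "2024-01-01", "bread": 120, "soda": 300},
    {"date": "2024-01-02", "bread": 115, "soda": 280},
]


def wide_to_long(rows, id_column="date"):
    """Emit one row per (date, target) pair instead of one column per target."""
    long_rows = []
    for row in rows:
        for column, value in row.items():
            if column == id_column:
                continue
            long_rows.append(
                {id_column: row[id_column], "target": column, "value": value}
            )
    return long_rows


long_rows = wide_to_long(wide_rows)
for row in long_rows:
    print(row)
```

With the data stacked this way, a single model can be trained across all targets, using the `target` column to tell the series apart.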

Single series model: A model that is trained to make predictions on a single target. A single series model can be a multivariate model or univariate model.

Baseline model: A type of naïve model that emulates what traditional forecasting methodologies may look like. A baseline model acts as an initial comparison benchmark for more intelligent models.
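One common naive benchmark is the seasonal naive forecast, which repeats the most recent full season. The sketch below uses it as an example of a baseline and scores it with mean absolute error. Which baseline models SensibleAI Forecast actually uses is not stated here, so the method, function names, and data are assumptions for illustration.

```python
def seasonal_naive_forecast(history, season_length, horizon):
    """Repeat the last full season of observations across the forecast horizon."""
    if len(history) < season_length:
        raise ValueError("history must cover at least one full season")
    last_season = history[-season_length:]
    return [last_season[i % season_length] for i in range(horizon)]


def mean_absolute_error(actual, predicted):
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Two weeks of hypothetical daily sales, followed by the actual third week.
history = [20, 22, 21, 25, 30, 42, 38, 21, 23, 22, 26, 31, 44, 40]
actual_next_week = [22, 23, 23, 27, 33, 45, 41]

baseline = seasonal_naive_forecast(history, season_length=7, horizon=7)
baseline_mae = mean_absolute_error(actual_next_week, baseline)
print(baseline)      # [21, 23, 22, 26, 31, 44, 40]
print(baseline_mae)  # 1.0
```

A more sophisticated model is only worth deploying if its error on the same period is lower than the baseline's.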