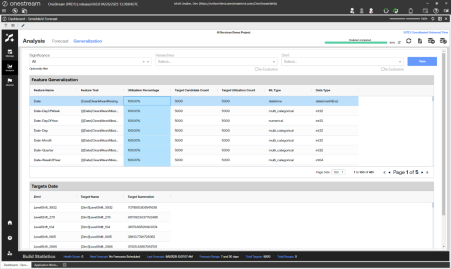

The Generalization view shows how widely each feature is used across deployed targets. Its Features Generalization grid is similar to the grid in the Pipeline Features Generalization view. The usage of each feature is displayed as a percentage of the total targets that are eligible for that feature.
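As a rough illustration of the percentage described above, the following minimal sketch divides the number of targets using a feature by the number of targets eligible for it. The function name, the feature names, and the counts are all hypothetical, and treating a feature with no eligible targets as 0% is an assumption rather than documented product behavior.

```python
def generalization_percent(targets_using: int, eligible_targets: int) -> float:
    """Return the share of eligible targets that use a feature, as a percentage."""
    if eligible_targets == 0:
        # Assumption: a feature with no eligible targets is reported as 0%.
        return 0.0
    return 100.0 * targets_using / eligible_targets


# Hypothetical counts: (targets using the feature, targets eligible for it).
usage = {"feature_a": (30, 40), "feature_b": (5, 50)}

for name, (used, eligible) in usage.items():
    print(f"{name}: {generalization_percent(used, eligible):.1f}%")
```

Note that the denominator is the count of eligible targets, not all deployed targets, so two features used by the same number of targets can show different percentages.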