In-Memory Workflow Imports

Direct Load Workflow Import

The Direct Load Workflow Import Type is optimized for performance by combining the Parse/Transform, Validate Transformations and Intersections, and Load Cube steps into a single Workflow process. This consolidation, and the resulting performance gain, is achieved by processing in memory and bypassing the overhead of writing and storing Source and Target record details to Stage database tables. Because in-memory processing limits certain functionality, Direct Load may not be an appropriate solution for all model requirements. All other Workflow functionality, such as Business Rules, Transformation Rules, and Derivative Rules, remains available in the Direct Load Type.
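The consolidation described above can be pictured as a single in-memory pass. The Python sketch below is a hypothetical model, not OneStream's implementation: the `direct_load` function, the comma-separated input format, and the rule and member structures are assumptions for illustration. Each record is parsed, transformed, validated, and aggregated into a stand-in for the target Cube in one loop, and no per-record Source or Target detail is kept once the pass completes.

```python
from collections import defaultdict


def direct_load(lines, rules, valid_members, max_errors=1000):
    """Hypothetical single-pass Direct Load model (not a OneStream API).

    Parse, transform, validate, and load happen in one loop. No per-record
    Source or Target detail is retained: only cube totals and a capped
    error list survive the pass.
    """
    cube = defaultdict(float)  # stand-in for the target Cube
    errors = []                # in-memory error list, capped per load
    for line in lines:
        source_member, raw_amount = line.split(",")  # Parse
        target = rules.get(source_member)            # Transform
        if target is None:
            problem = "no transformation rule"
        elif target not in valid_members:            # Validate intersection
            problem = "invalid target member"
        else:
            cube[target] += float(raw_amount)        # Load (aggregate)
            continue
        if len(errors) < max_errors:
            errors.append((source_member, problem))
    return dict(cube), errors


cube, errors = direct_load(
    ["Sales,100", "Sales,50", "COGS,-40", "Misc,5"],
    rules={"Sales": "4000", "COGS": "5000"},
    valid_members={"4000", "5000"},
)
```

Because nothing but the aggregated totals and the capped error list is kept, there is no stored record set to re-transform or drill into afterward, which mirrors the limitations listed later in this section.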

Direct Load Use Cases

The Direct Load Workflow is designed for data that has a high frequency of change and does not demand durability for audit and history.

Common Uses

- Data Integrations where the OneStream Metadata and Source System are mirrored, allowing “* to *” Transformation rules to pass through all records and minimizing the need for Drill-Down or Transformation analysis.
- Data that is “disposable” in nature. Typically, this is data that changes frequently and may be valid only for a short time, perhaps one to seven days.
- High-volume data loads, such as nightly batch loading, where optimal performance is desired. Such data is commonly deleted and reloaded frequently.
- Extended Application data moves, where data from a detailed application feeds a summarized target application.
- Where target data in OneStream is not required to be durable “book-of-record” data.
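When the Source System and OneStream Metadata are mirrored, a single “* to *” rule maps every source member to the identically named target member. The Python sketch below models that idea only; the `transform` function, the dictionary of one-to-one maps, and the precedence of explicit maps over the wildcard are illustrative assumptions rather than OneStream's rule engine.

```python
def transform(member, one_to_one, pass_through=True):
    """Hypothetical rule lookup: explicit one-to-one maps are checked first,
    then an optional "* to *" rule passes the source member through unchanged."""
    if member in one_to_one:
        return one_to_one[member]
    if pass_through:
        return member  # "* to *": target member equals source member
    return None  # unmapped; would surface as a Transformation error


# Mirrored metadata: every source member already exists in OneStream,
# so no explicit maps are needed and every record passes through.
records = ["4000", "5000", "6100"]
mapped = [transform(m, {}) for m in records]
```

With mirrored metadata there is little to analyze in the mapping step, which is why the lack of Drill-Down and Transformation history matters less for this use case.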

Important Limitations

A key differentiator of the Direct Load is that it does not store source and target records in Stage database tables. By design, this eliminates the audit and historical archiving of Workflow activity. Another consequence of the in-memory Workflow is that the Drill-Down feature cannot be used to analyze records between the Finance Engine and Stage tables.

- Direct Load Type is not appropriate where:
  - Source file import history is required for historical reference.
  - Transformation Rule history is required for historical reference.
  - Drill-Down from the Finance Engine is required.
  - Text-based View member values are required to be file-based.
  - Data loads are required to Append to prior Imports.
- Direct Load Type does not support historical audit of Workflow history, such as Import and Transformation Rule history.
- Direct Load Type does not support Re-Transform, because Import records are not stored. Data must be re-loaded.
- Transformation and Validation analysis and map correction are limited to 1000 error records per load.
- Data files cannot load to Time and Scenarios beyond the current Workflow Scenario and Time. The Time and Scenario of each data record being loaded must match the Workflow Scenario and Time.
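The Time and Scenario restriction amounts to an equality test against the Workflow's Scenario and Time. The following Python sketch is hypothetical: the `Record` type, the `split_by_workflow_pov` function, and member names such as `2024M1` are assumptions, and because the text does not say how mismatched records are reported, this sketch simply sets them aside.

```python
from typing import List, NamedTuple


class Record(NamedTuple):
    scenario: str
    time: str
    account: str
    amount: float


def split_by_workflow_pov(records, wf_scenario, wf_time):
    """Hypothetical check: keep records whose Scenario and Time match the
    Workflow Scenario and Time; set aside everything else."""
    accepted: List[Record] = []
    rejected: List[Record] = []
    for record in records:
        if record.scenario == wf_scenario and record.time == wf_time:
            accepted.append(record)
        else:
            rejected.append(record)
    return accepted, rejected


accepted, rejected = split_by_workflow_pov(
    [
        Record("Actual", "2024M1", "4000", 100.0),
        Record("Budget", "2024M1", "4000", 90.0),
        Record("Actual", "2024M2", "4000", 120.0),
    ],
    wf_scenario="Actual",
    wf_time="2024M1",
)
```

Only the first record matches both the Workflow Scenario and Time; a file spanning several periods or scenarios would need to be split across the corresponding Workflows.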

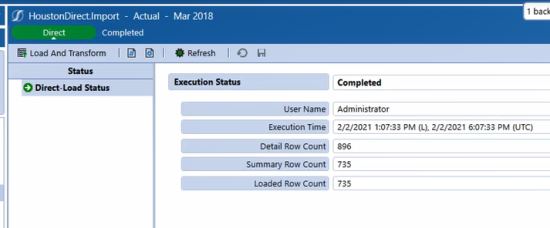

Because Direct Load is an in-memory Workflow with only a single step for the data integration process, Load And Transform, the Direct Load Execution Status screen provides statistics for analyzing the Workflow’s performance. These key statistics help determine whether the Workflow design follows best practices for optimizing application performance.

- Detailed Row Count: The total number of Data Source and Derivative Rule records.
- Summary Row Count: The total number of records summarized in the Transformation process.
- Loaded Row Count: The recorded number of records loaded to the Finance Engine target Cube, which should always equal the Summary Row Count.
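The relationship between these three counts can be illustrated with a small Python model. It is a sketch under assumptions: the `load_statistics` function is hypothetical, each row is represented as an (intersection, amount) pair, and rows that share a target intersection are assumed to be summed into a single summary row.

```python
def load_statistics(source_rows, derivative_rows):
    """Hypothetical model of the Execution Status row counts.

    Each row is (intersection, amount). Rows sharing a target intersection
    are summed into one summary row, and each summary row is loaded once.
    """
    detailed = list(source_rows) + list(derivative_rows)
    summary = {}
    for intersection, amount in detailed:
        summary[intersection] = summary.get(intersection, 0.0) + amount
    loaded = dict(summary)  # stand-in for the write to the target Cube
    return {
        "detailed": len(detailed),
        "summary": len(summary),
        "loaded": len(loaded),
    }


stats = load_statistics(
    source_rows=[
        (("E1", "4000"), 100.0),
        (("E1", "4000"), 50.0),
        (("E2", "4000"), 10.0),
    ],
    derivative_rows=[(("E1", "4000"), 5.0)],
)
# In this model, "loaded" and "summary" are expected to match.
```

Here four detailed rows collapse into two summary rows. The gap between the Detailed and Summary counts shows how much summarization the Transformation step performs, and a Loaded count that differed from the Summary count would indicate records that did not reach the Cube.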

Direct Load Transformations and Validations

Because of the Direct Load Workflow’s in-memory processing, Transformation and Validation errors are not stored in a table. The total number of errors that can be processed and presented in Validation is limited to 1000 records. If the total number of errors exceeds 1000, the data must be re-loaded to re-execute the Transformation and Validation process and generate the next batch of errors, up to a maximum of 1000 records per load.

- Total Direct Load Error Storage Limit = 50,000 records
- Error Presentation Limit = 1000 records presented per Import load
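The reload cycle can be worked through with a small Python model. It assumes, hypothetically, that every presented error is corrected before the next reload, so each load surfaces the next batch of up to 1000 errors. The `error_batches` function is illustrative only and does not model the 50,000-record storage limit.

```python
PRESENTATION_LIMIT = 1000  # errors presented per Import load (from the text)


def error_batches(error_count, limit=PRESENTATION_LIMIT):
    """Sizes of successive error batches across reloads, assuming every
    presented error is fixed before the data is re-loaded (hypothetical)."""
    batches = []
    remaining = error_count
    while remaining > 0:
        batch = min(remaining, limit)
        batches.append(batch)
        remaining -= batch
    return batches


batches = error_batches(2500)
```

Under these assumptions, 2,500 errors take three loads to surface (1000, 1000, then 500). This is the repeated load-and-fix cost that motivates finalizing complex mappings in a Standard Workflow first.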

Integrations with high complexity and mapping may benefit from a “development” Standard Workflow used to finalize the core Transformation Rules. A Standard Workflow supports pageable Validation and Intersection Error analysis, as well as the ability to Re-Transform source data, which the Direct Load does not support. The Standard Workflow also provides Drill-Down from the Finance Engine to Stage, which may streamline the data validation process. Once the core Transformation Rules are developed, a “production” Direct Load Workflow can be used, managing only the Validation exceptions.