The Model page in the Configure section lets you set various options that manage how the SensibleAI Forecast engine runs your modeling pipeline. These parameters tweak processes related to feature transformation and modeling.

Use this page to configure the model settings that run in the model pipeline. Adjusting these settings for your modeling project is optional. You can accept the default settings and run the pipeline.

View Options

View options on the Model page let you set options for the entire project or create different options for each target in the model build.

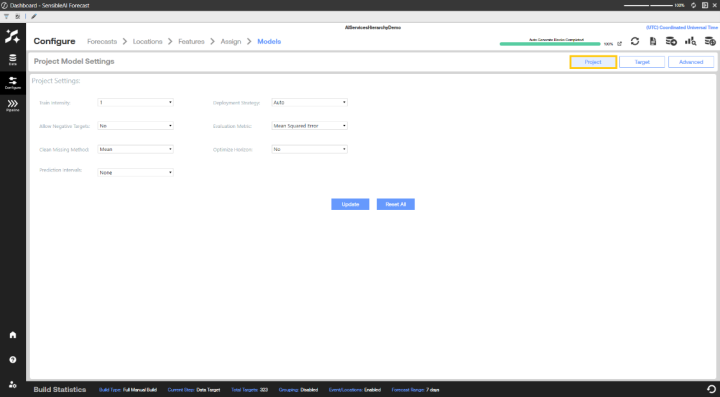

Set Project-wide Model Options

The Project View lets you set options that apply to all targets.

-

Set a value for Train Intensity. This parameter ranges from 1 to 5 and controls how many hyperparameter tuning iterations occur during training. The higher the training intensity, the more iterations are performed to find the optimal hyperparameters, which can increase accuracy. However, the run time for the modeling job also increases with a higher intensity.

Typically, training intensities between 3 and 5 achieve both high accuracy and reasonable run times.

-

Set a value for Deployment Strategy. This specifies which models to deploy after the model training phase. Select Auto if you want SensibleAI Forecast to determine the most effective deployment strategy for your project.

NOTE: Selecting Top Model or Best 3 Models for the deployment strategy treats targets in groups as a whole, not as individual targets.

-

Set a value for Allow Negative Targets. The default setting of False ensures that SensibleAI Forecast does not predict values below zero for the project.

-

Set a value for Evaluation Metric. Specify the error metric that evaluates model accuracy during training and testing. See Appendix 3: Error Metrics for an error metric list and details.

-

Set a value for Clean Missing Method. This setting determines the method used to handle missing data values during data cleaning. Values are:

Interpolate: Fills in missing data using linear interpolation between the surrounding non-missing data points. For example, [1.0, nan, 3.0, nan, -5.0] becomes [1.0, 2.0, 3.0, -1.0, -5.0].

Kalman: Fills in missing data using a Kalman filter.

Local Median: Fills in missing data using the median of the past n and future n days from the missing data point, where n depends on the frequency. For example, with n=2, [1.0, nan, 2.0, 3.0, nan, 6.0] becomes [1.0, 2.0, 2.0, 3.0, 3.0, 6.0].

Mean: Fills in missing data using the mean value of the non-missing data. For example, [1.0, nan, 3.0, nan, 5.0] becomes [1.0, 3.0, 3.0, 3.0, 5.0].

Zero: Fills in missing data with 0. For example, [1.2, nan, 3.0, nan, nan] becomes [1.2, 0, 3.0, 0, 0].

-

Set a value for Optimize Horizon. Set to Yes to split the test portion of the largest split into smaller chunks, each equal in length to the forecast range. If this produces more than 10 chunks, only 10 chunks are used.

These chunks are used in the model selection stage to perform individual predictions on each chunk. This results in a better test of which model performs best on the given forecast range, but also results in longer run times.

-

Set a value for Prediction Intervals. If set to a percentage, prediction intervals are computed for models and provide a confidence band at the selected percentage around predictions and back tests.

-

Set a value for Compute Intervals for. This setting only displays if the prediction interval value is set to something other than None. It specifies which models’ prediction intervals are calculated in the prediction. Back tests compute prediction intervals for all models (if prediction interval value is set to something other than None).

-

Set a value for Model Ranking. This setting is only for projects that have a grouping strategy configured. Select from the following options:

-

Targets: Calculates the model ranking for each individual target in a project.

-

Groups: Calculates the model ranking for each group of targets in a project.

-

When you have made your settings, click Update at the bottom of the Project Settings pane.

-

A message box informs you that project level settings have been updated. Click OK to close the message box.

NOTE: Targets set to Project use these settings. Targets set to Advanced do not use these settings unless reset to Project.

TIP: Click Reset All to reset all targets to use the project level settings.

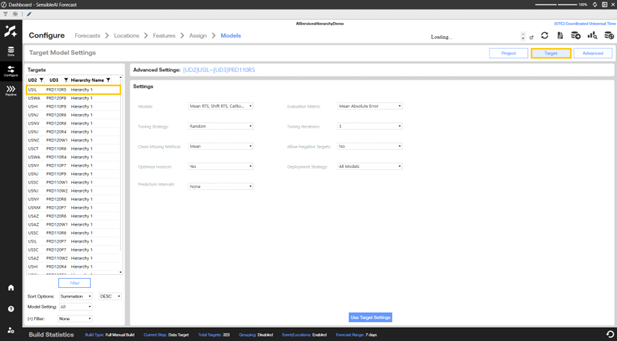
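The chunking behind Optimize Horizon can be illustrated with a short sketch. This is not the SensibleAI Forecast implementation. The function name `horizon_chunks` and its parameters are hypothetical, and two behaviors are assumptions: an incomplete trailing chunk is dropped, and when more than 10 chunks are produced, the first 10 are kept.

```python
def horizon_chunks(test_points, forecast_range, max_chunks=10):
    """Split a test portion into consecutive chunks of forecast_range points.

    Assumptions (not confirmed by the product documentation):
    - a trailing chunk shorter than forecast_range is dropped
    - when more than max_chunks chunks result, the first max_chunks are kept
    """
    if forecast_range <= 0:
        raise ValueError("forecast_range must be positive")
    last_start = len(test_points) - forecast_range
    chunks = [
        test_points[start:start + forecast_range]
        for start in range(0, last_start + 1, forecast_range)
    ]
    return chunks[:max_chunks]


# A 30-point test portion with a forecast range of 7 yields 4 full chunks.
test_portion = list(range(1, 31))
for chunk in horizon_chunks(test_portion, forecast_range=7):
    print(chunk[0], "to", chunk[-1])

# A forecast range of 2 would yield 15 chunks, so the cap of 10 applies.
print(len(horizon_chunks(test_portion, forecast_range=2)))
```

Each chunk would then receive its own prediction during model selection, which is why enabling this setting lengthens run times.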

Set Model Options for Each Target

The Targets view lets you select advanced settings on a target-by-target basis. This provides finer-grained modeling during the pipeline run, but can increase the run time of the pipeline job.

To use the advanced settings:

-

In the Targets pane, select a target, then use the drop-downs to change setting values for the selected target or group. Settings are as follows:

Models: Specify the models offered by SensibleAI Forecast to be trained for a selected target.

Evaluation Metric: This is the same as the Project view.

Tuning Strategy: Select the strategy by which to tune hyperparameters during training.

Tuning Iterations: This is the same as Train Intensity on the Project view.

Clean Missing Method: This setting determines the method used to handle missing data values during data cleaning. Values are:

-

Interpolate: Fills in missing data using linear interpolation between the surrounding non-missing data points. For example, [1.0, nan, 3.0, nan, -5.0] becomes [1.0, 2.0, 3.0, -1.0, -5.0].

-

Kalman: Fills in missing data using a Kalman filter.

-

Local Median: Fills in missing data using the median of the past n and future n days from the missing data point, where n depends on the frequency. For example, with n=2, [1.0, nan, 2.0, 3.0, nan, 6.0] becomes [1.0, 2.0, 2.0, 3.0, 3.0, 6.0].

-

Mean: Fills in missing data using the mean value of the non-missing data. For example, [1.0, nan, 3.0, nan, 5.0] becomes [1.0, 3.0, 3.0, 3.0, 5.0].

-

Zero: Fills in missing data with 0. For example, [1.2, nan, 3.0, nan, nan] becomes [1.2, 0, 3.0, 0, 0].

Allow Negative Targets: This is the same as the Project view.

Optimize Horizon: This is the same as the Project view.

Deployment Strategy: This is the same as the Project view.

Prediction Intervals: This is the same as the Project view.

Compute Intervals for: This is the same as the Project view.

-

Click Use Advanced at the bottom of the Settings pane. A message box notifies you that the selected advanced settings will be used for the selected target.

-

Click OK to close the message box. SensibleAI Forecast then uses your advanced settings for that target when running the pipeline job.

-

Repeat the previous steps for any other targets in your project.

-

To change advanced settings that you have already made for a target, use the previous steps to select the target and change the value of each setting, then click Update at the bottom of the Settings pane.

TIP: Click Use Project at the bottom of the Settings pane to use the values set in the Project view for the selected target. Click Refresh to see the targets with the Advanced model settings in the Targets pane.
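As an illustration of the Clean Missing Method values, here is a minimal Python sketch of the Interpolate, Local Median, Mean, and Zero fills. It is not the product's implementation. The function names are hypothetical, the Kalman method is omitted, and the handling of edge cases (for example, leaving leading and trailing gaps unfilled when interpolating) is an assumption.

```python
import math
from statistics import mean, median

NAN = float("nan")


def is_missing(value):
    """Treat None and NaN as missing."""
    return value is None or (isinstance(value, float) and math.isnan(value))


def fill_zero(values):
    return [0.0 if is_missing(v) else v for v in values]


def fill_mean(values):
    overall = mean([v for v in values if not is_missing(v)])
    return [overall if is_missing(v) else v for v in values]


def fill_local_median(values, n):
    """Median of the non-missing points up to n positions before and after."""
    filled = []
    for i, v in enumerate(values):
        if is_missing(v):
            window = values[max(0, i - n): i + n + 1]
            v = median([w for w in window if not is_missing(w)])
        filled.append(v)
    return filled


def fill_interpolate(values):
    """Linear interpolation between neighboring non-missing points.

    Assumption: gaps before the first or after the last known point stay missing.
    """
    filled = list(values)
    known = [i for i, v in enumerate(values) if not is_missing(v)]
    for left, right in zip(known, known[1:]):
        step = (values[right] - values[left]) / (right - left)
        for i in range(left + 1, right):
            filled[i] = values[left] + step * (i - left)
    return filled


print(fill_interpolate([1.0, NAN, 3.0, NAN, -5.0]))          # [1.0, 2.0, 3.0, -1.0, -5.0]
print(fill_local_median([1.0, NAN, 2.0, 3.0, NAN, 6.0], 2))  # [1.0, 2.0, 2.0, 3.0, 3.0, 6.0]
print(fill_mean([1.0, NAN, 3.0, NAN, 5.0]))                  # [1.0, 3.0, 3.0, 3.0, 5.0]
print(fill_zero([1.2, NAN, 3.0, NAN, NAN]))                  # [1.2, 0.0, 3.0, 0.0, 0.0]
```

Every fill returns a series of the same length as its input, since only the missing positions change.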

Set Advanced Settings

The Advanced view lets you select advanced settings for the project.

IMPORTANT: This is an advanced page and should only be used by users with a deeper understanding of cross-validation and modeling techniques.

Configure Cross Validation

The Cross Validation Settings pane sets the desired cross-validation configuration for the model build.

The first selection must be the cross-validation type, which is one of the following:

Total Splits: Set the total number of splits to be used in the model build.

Custom Dates: Add rows to the table editor using the Add + button. Each split number must have every data set type listed as True on the Saved Settings pane on the right. Data set types for a given split cannot overlap and must be contiguous. Each row must contain the following information:

-

Split Number: The split to which the row’s information corresponds.

-

Dataset Type: The data set type to which the row belongs.

-

Start (Datetime): The start date of the split portion. The range of valid dates is displayed at the top of the table.

-

End (Datetime): The end date of the split portion. The range of valid dates is displayed at the top of the table.

Custom Percentiles: The same as the Custom Dates settings, but using start and end percentages for the data set portions instead of dates. The percentages are decimals from 0 to 1, inclusive. For example, 0.1 through 0.8 for each split number.

NOTE: For custom dates and custom percentiles, the data set type column values should be in this order for each split number: Train, Validation, Test, Holdout.

Default: The default settings recommended by SensibleAI Forecast based on the data set.

Has Holdout: Select a Boolean value to specify whether the cross-validation strategy uses a holdout set.
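The rules for Custom Percentiles rows (values between 0 and 1, data set types in the order Train, Validation, Test, Holdout, no overlaps, and contiguous portions) can be checked with a sketch like the one below. The function name `validate_percentile_rows`, the tuple layout, and the error messages are hypothetical, and the sketch assumes that "contiguous" means each portion starts exactly where the previous one ends. The validation that SensibleAI Forecast performs on save may differ.

```python
ORDER = ["Train", "Validation", "Test", "Holdout"]
TOLERANCE = 1e-9  # guards against floating-point noise in comparisons


def validate_percentile_rows(rows, required=tuple(ORDER)):
    """Return a list of error messages for (split, type, start, end) rows."""
    errors = []
    by_split = {}
    for split, dataset_type, start, end in rows:
        by_split.setdefault(split, []).append((dataset_type, start, end))

    expected = [t for t in ORDER if t in required]
    for split, parts in sorted(by_split.items()):
        types = [p[0] for p in parts]
        if types != expected:
            errors.append(f"split {split}: expected types {expected}, got {types}")
            continue
        for dataset_type, start, end in parts:
            if not (0.0 <= start < end <= 1.0):
                errors.append(f"split {split} {dataset_type}: invalid range {start} to {end}")
        for (prev_type, _, prev_end), (next_type, next_start, _) in zip(parts, parts[1:]):
            if prev_end - next_start > TOLERANCE:
                errors.append(f"split {split}: {prev_type} and {next_type} overlap")
            elif next_start - prev_end > TOLERANCE:
                errors.append(f"split {split}: gap between {prev_type} and {next_type}")
    return errors


rows = [
    (1, "Train", 0.0, 0.6),
    (1, "Validation", 0.6, 0.75),
    (1, "Test", 0.75, 0.9),
    (1, "Holdout", 0.9, 1.0),
]
print(validate_percentile_rows(rows))  # []
```

If Has Holdout is turned off, the same check could be run with `required=("Train", "Validation", "Test")`.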

Configure Avoidance Periods

The Avoidance Periods table contains portions of the data set that should not be used when evaluating metrics for model performance.
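To make the effect of an avoidance period concrete, the sketch below computes an error metric while skipping dates that fall inside any avoidance period. It is an assumption-laden illustration, not the product's logic: the function names are hypothetical, mean absolute error stands in for whichever Evaluation Metric is selected, and both the start and end dates are treated as inclusive.

```python
from datetime import date


def in_avoidance_period(day, periods):
    """True if day falls inside any (start, end) period, both ends inclusive."""
    return any(start <= day <= end for start, end in periods)


def mae_outside_avoidance(dates, actuals, predictions, periods):
    """Mean absolute error over dates that are not in an avoidance period."""
    errors = [
        abs(actual - predicted)
        for day, actual, predicted in zip(dates, actuals, predictions)
        if not in_avoidance_period(day, periods)
    ]
    if not errors:
        raise ValueError("every date falls inside an avoidance period")
    return sum(errors) / len(errors)


dates = [date(2024, 1, d) for d in range(1, 6)]
actuals = [10, 12, 50, 11, 9]       # January 3 is an anomalous spike
predictions = [11, 12, 20, 10, 9]

print(mae_outside_avoidance(dates, actuals, predictions, periods=[]))
print(mae_outside_avoidance(
    dates, actuals, predictions,
    periods=[(date(2024, 1, 3), date(2024, 1, 3))],
))
```

With the spike excluded, the metric reflects performance on typical dates only, which is the purpose of marking such a period for avoidance.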

To add avoidance periods:

-

Click the Add + button.

-

Enter a start and end date.

-

Click Save on the table editor.

-

Click Save after configuring cross-validation settings and avoidance periods.

Settings are validated. If the settings are valid, they are saved and the page refreshes with the newly saved settings in the right pane. If the settings are invalid, a message displays the validation errors for the configured settings and avoidance periods.

View Saved Settings

The Saved Settings pane shows what the current cross-validation settings are for the model build. It includes the following:

Current Splits: This chart shows the different test, train, validation, and holdout portions and which dates from the data set belong to each portion.

Has Holdout Set: Indicates if the cross-validation strategy has a holdout set (True, False). This is dependent on the number of dates in the data set.

Has Test Set: Indicates if the cross-validation strategy has a test set (True, False). This is dependent on the number of dates in the data set.

Has Training Set: Indicates if the cross-validation strategy has a training set (True, False).

Has Validation Set: Indicates if the cross-validation strategy has a validation set (True, False). This is dependent on the number of dates in the data set.

Strategy Name: The name of the cross-validation strategy.

Total Splits: The total number of splits in the cross-validation strategy.

If you are satisfied with the cross-validation and avoidance period settings, continue to the Pipeline section of the Model Build phase and run the pipeline.